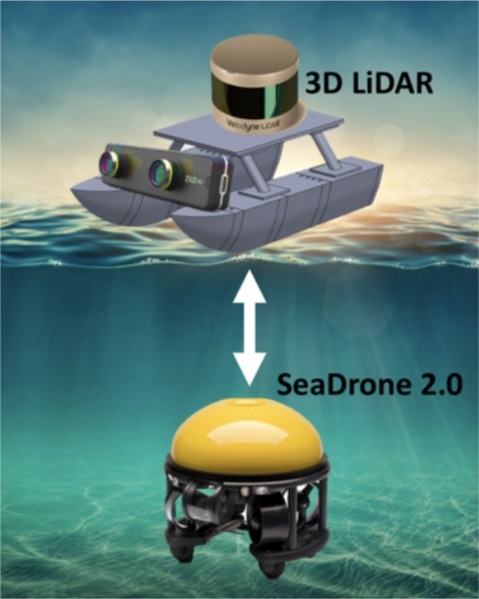

This course trains students to participate in world-class competitions; our target is the RobotX Competition (https://www.robotx.org/) in 2018. The course covers fundamental and advanced topics in vision for mobility tasks, including challenges that combine object recognition, pose estimation, motion planning, and SLAM. We will systematically study each component used by the previous winning teams from 2014 and 2016, as well as cutting-edge methods that may improve performance. Students will form teams to develop term projects, and we encourage in-class discussion and whiteboard presentations as part of class participation. This is a "learning-by-doing" course, and each teaching module includes an in-class lab/tutorial. We will use "Duckietown" (an open course, MIT 2.166 Autonomous Vehicles) as the platform and focus on advanced topics using Gazebo, 3D perception, and deep learning.

We have an in-class tutorial/lab each week. The lab materials typically include 3 specific tasks (programming / algorithm / system work), and students are expected to finish them during class.

There will be no midterm/final exams.

Instructor

Teaching Assistant

Teaching Assistant

Teaching Assistant

Teaching Assistant

| Week | Date | Topic | Lecture/Discussion | Lab |
|---|---|---|---|---|
| I | 2/22 | Gazebo | Gazebo and Virtual Challenges | Self-evaluation |
| II | 3/1 | | Introduction to Robotic Vision and RobotX Competition | Subscribe/Publish ROS Messages in Gazebo |
| III | 3/8 | | Gazebo for Marine Robotics | Gazebo USV I |
| IV | 3/15 | | Duckietown Lane Following Revisit | Duckietown Virtual Lane Following |
| V | 3/22 | From DT to RX | AprilTag; How to Read a Research Paper | AprilTag Navi |
| VI | 3/29 | | HSV Filter & FSM | Light Buoy Sequence Detection |
| VII | 4/5 | | Image Features: Harris Corner, FAST, SIFT, & MSER | Placard Feature Detector |
| VIII | 4/12 | | Bayes Filter | GPS Navi |
| IX | 4/19 | Midterm Proposal Pitch | | |
| X | 4/26 | 3D Perception | 3D Sensors and Data Logging | Velodyne & RGBD Camera Calibration |
| XI | 5/3 | | Point Cloud and ICP | PCL Normal RANSAC ICP |
| XII | 5/10 | | Laser-Based Feature Detection | 3D Feature Tracking |
| XIII | 5/17 | Deep Learning | Intro to Deep Learning | Layer Computation |
| XIV | 5/24 | | Network Structure | Network Structure and Surgery |
| XV | 5/31 | | DL Prediction | Prediction from Pre-trained Models |
| XVI | 6/7 | | DL Training | Training with Freiburg Groceries |
| XVII | 6/14 | | Feature Engineering vs. Deep Learning | Placard Deep Learning |
| XVIII | 6/21 | Final Presentation | | |

If you are interested, please visit our ARG-NCTU website or contact us using the following information.

(EE627) 1001 University Road, Hsinchu,

Taiwan 30010, ROC

David Chen (TA)

ccpwearth@gmail.com